You may have wondered why a single section of your site, or even your entire domain, is slipping in the rankings even though you believe you have done everything in your power to keep it clean. You adhere to all common standards, and your site is fast, secure, well structured, naturally linked and filled with high-quality content. Nevertheless, your rankings on Google and other search engines are not what you expect. The reason is often what we call “black sheep” here.

What are black sheep?

There are articles that Google dislikes for a variety of reasons. As a result, that one article, the category it belongs to or the entire website can be penalized. This may show up as a slight drop in the rankings or as a penalty with a far greater impact.

If you’ve already implemented our checklist for high quality websites, one factor may remain that Google keeps re-evaluating: the authority of your website on a particular topic. It might sound as if you can’t do anything about it, but that’s not true.

Often it is a few individual articles – maybe 3 out of 76 – that Google considers sensitive.

A silent contribution

An example of such an article: you take up a health-related topic on which, from Google’s point of view, you do not have sufficient expertise. Search engines simply want to prevent this kind of “layman’s tip” from spreading around the world, and rightly so.

How do you recognize these problem articles?

To detect black sheep, we have developed two features in our SERPBOT:

- A keyword filter with a graph that gives you the ranking history of the filtered keywords. That may sound trivial, but this often provides extremely interesting findings, as can be seen in the following examples.

- A dedicated “Rankings per URL” feature, which shows how the mean of your rankings for a selected subpage develops over time. With this tool you can check in a targeted way whether Google is treating certain subpages unfavorably. The mean is calculated across all keywords that have ever ranked for the respective subpage.
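The idea behind a “rankings per URL” view can be sketched roughly as follows. This is not SERPBOT's actual implementation: the record layout `(date, url, keyword, position)`, the function name `mean_rank_per_url` and the sample data are all assumptions made up for illustration.

```python
from collections import defaultdict
from statistics import mean


def mean_rank_per_url(records, url):
    """Return {date: mean position} for one subpage.

    records: iterable of (date, url, keyword, position) tuples
    (a hypothetical layout, not an export format of any real tool).
    Every keyword that has a position for the URL on a given date
    contributes to that date's mean.
    """
    positions_by_date = defaultdict(list)
    for date, record_url, _keyword, position in records:
        if record_url == url:
            positions_by_date[date].append(position)
    # Sort by date so the result reads like a time series.
    return {d: mean(p) for d, p in sorted(positions_by_date.items())}


# Invented sample data: two keywords for one subpage, one for another.
records = [
    ("2020-01-01", "/breathing-tips", "atemübungen", 4),
    ("2020-01-01", "/breathing-tips", "atemnot tipps", 8),
    ("2020-02-01", "/breathing-tips", "atemübungen", 20),
    ("2020-02-01", "/breathing-tips", "atemnot tipps", 30),
    ("2020-02-01", "/recipes", "apple pie", 3),
]
history = mean_rank_per_url(records, "/breathing-tips")
```

A rising mean position for a single subpage, while the rest of the site stays stable, is the kind of pattern that would point to a black sheep.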

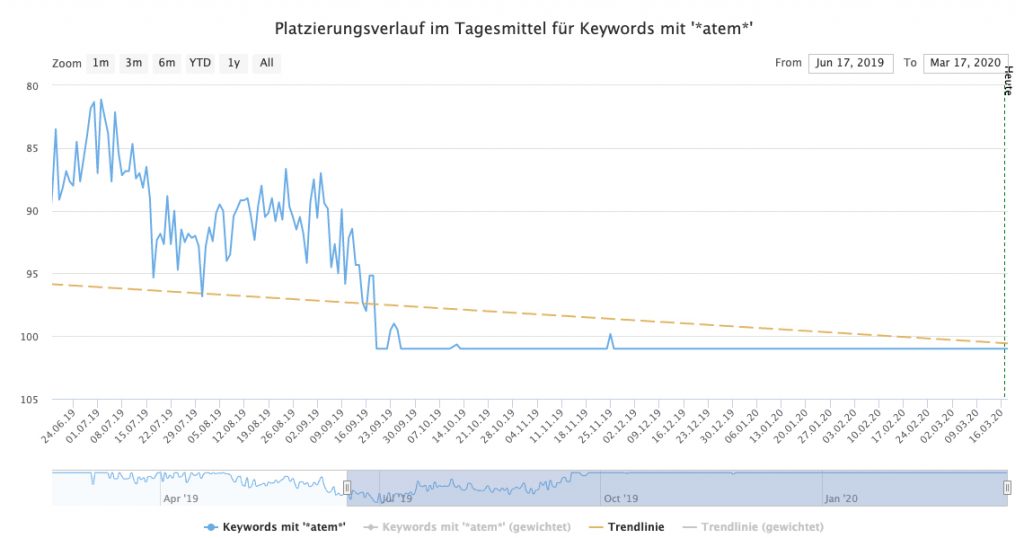

Example #1: *atem*

Here we see how the rankings of articles containing “*atem*” (German for “breath”) developed: Google systematically downgraded them until they no longer ranked at all.

Ranking slump for sample keywords containing “atem”

Let’s look at another example.

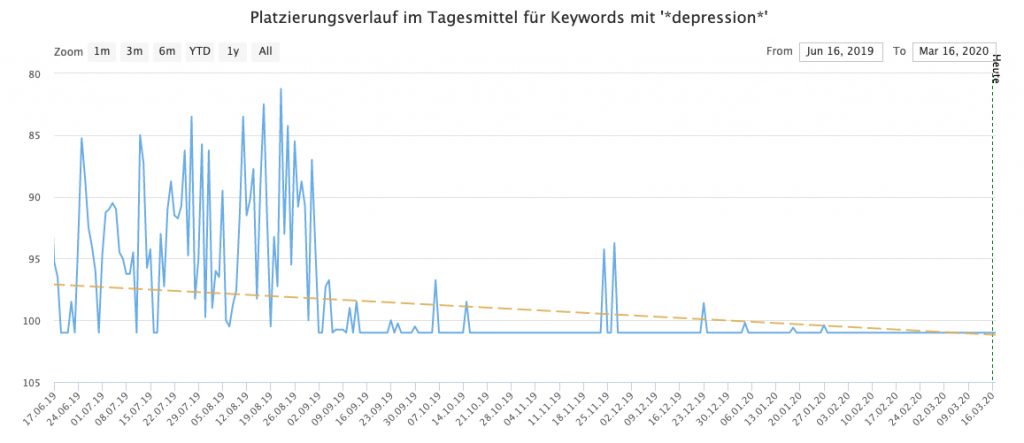

Example #2: *depression*

Here we see the ranking history of some articles containing the word “*depression*”. Their rankings also kept getting worse until the articles were finally removed from the index, which shows up as a flat zero line.

Ranking slump for sample keywords related to depression

This shows clearly that Google seems to consider some articles problematic. The Google Medic update immediately comes to mind: it classifies health-related content as critical if the respective site is not an authority in that area. This is understandable, since nobody wants advice that is incomplete or even harmful to health.
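A wildcard keyword filter like the ones used in the two examples can be approximated in a few lines. This is a minimal sketch under assumptions: the history layout (keyword mapped to a list of positions, with `None` meaning “not ranked”), the function names and the sample values are hypothetical and do not come from SERPBOT.

```python
from fnmatch import fnmatch


def filter_history(history, pattern):
    """Keep keywords matching a wildcard pattern such as '*atem*'.

    Matching is made case-insensitive by lowercasing both sides.
    """
    return {
        keyword: ranks
        for keyword, ranks in history.items()
        if fnmatch(keyword.lower(), pattern.lower())
    }


def lost_all_rankings(ranks):
    """True if the keyword ranked at some point but has no position at the last check."""
    return any(r is not None for r in ranks[:-1]) and ranks[-1] is None


# Invented ranking histories, oldest check first; None means "no ranking".
history = {
    "atemübungen": [5, 12, 40, None],
    "depression hilfe": [8, 9, 10, 11],
    "Atemnot": [3, 30, None, None],
}

matched = filter_history(history, "*atem*")
dropped = sorted(kw for kw, ranks in matched.items() if lost_all_rankings(ranks))
```

Keywords that end up in `dropped` correspond to the zero line in the graphs: they once ranked and no longer do.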

How can one deal with these articles?

We can assume that Google will also neglect the rest of the site if some articles fall out of line in terms of content and/or authority. After all, Google wants to prevent these articles from being found. It doesn’t help much if the rest of the site is polished and the other articles rank strongly on their own.

Various precautions can be taken so that the rest of the site does not suffer from the effect of these negative articles.

These include:

- Overhauling these black sheep: If you are confident you can do it, give these articles a major overhaul. This should cover both an editorial revision of the content and a review of the external links to and from these articles. There is, of course, a risk that these optimizations will not change anything.

- Moving these articles to a different domain: You can set up a second website where potentially critical articles find a home. A possible penalty for this content then no longer affects your main domain. Avoid bidirectional links between the two domains.

- Deleting these articles: When in doubt, the most sustainable option is simply to remove the black sheep. This only makes sense if these articles are an exception to the content of the rest of the website, so that your site does not depend on them.

What do we learn from it?

The little sheep conclusion

There are articles that Google regards as critical due to a lack of content authority on your domain. These contributions must be identified, changed, moved or removed through creative and tool-supported work.

You can then monitor your rankings again and check whether there is a change in the mean values of the rankings across your domain. In the above two examples, the affected articles were removed and the website recovered completely after about four weeks.
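One simple way to check for such a change is to compare the mean position before and after the date on which you treated the articles. The sketch below assumes a list of `(date, mean position)` pairs with ISO-formatted date strings; the function name `mean_change` and the numbers are invented for illustration.

```python
from statistics import mean


def mean_change(history, change_date):
    """Return mean position after change_date minus mean position before it.

    history: list of (iso_date, position) pairs (hypothetical layout).
    ISO date strings sort chronologically, so plain string comparison works.
    A negative result means the rankings improved, since lower positions are better.
    Returns None if either side of the split has no data.
    """
    before = [pos for date, pos in history if date < change_date]
    after = [pos for date, pos in history if date >= change_date]
    if not before or not after:
        return None
    return mean(after) - mean(before)


# Invented domain-wide mean positions around a cleanup on 2020-03-15.
domain_history = [
    ("2020-03-01", 28),
    ("2020-03-08", 32),
    ("2020-04-05", 12),
    ("2020-04-12", 8),
]
delta = mean_change(domain_history, "2020-03-15")
```

Because search rankings fluctuate on their own, a single comparison like this is only a rough indicator; it is worth repeating over several weeks.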

We wish you every success in identifying and treating your black sheep!